
Inside The Computer

2-1 Data Storage: A Bit About the Bit

A computer can have seemingly limitless capabilities. It's an entertainment center with hundreds of interactive games. It's a virtual school or university providing interactive instruction and testing in everything from anthropology to zoology. It's a painter's canvas. It's a video telephone. It's a CD player. It's a home or office library giving ready access to the complete works of Shakespeare or interactive versions of corporate procedures manuals. It's a television. It's the biggest marketplace in the world. It's a medical instrument capable of monitoring vital signs. It's the family photo album. It's a print shop. It's a wind tunnel that can test experimental airplane designs. It's a voting booth. It's a calendar with a to-do list. It's a recorder. It's an alarm clock that can remind you to pick up the kids. It's an encyclopedia. It can perform thousands of specialty functions that require specialized skills: preparing taxes, drafting legal documents, counseling suicidal patients, and much more.

In all of these applications, the computer deals with everything as electronic signals. Electronic signals come in two flavors: analog and digital. Analog signals are continuous wave forms in which variations in frequency and amplitude can be used to represent information such as sound and numerical data. The sound of our voice is carried by analog signals when we talk on the telephone. With digital signals, everything is described in two states: the circuit is either on or off. Generally, the on state is represented by the number 1 and the off state by the number 0. Computers are digital and, therefore, require digital data. To make the most effective use of computers and automation, almost everything in the world of electronics and communication is going digital.

Going Digital

A by-product of the computer revolution is a trend toward "going digital" whenever possible. For example, the recording industry has moved away from analog recording on records and toward digital recording on CD. One reason for this move is that analog signals cannot be reproduced exactly. If you have ever duplicated an analog audiotape, you know that the copy is never as good as the source tape. In contrast, digital CDs can be copied onto other CDs over and over without deterioration. When CDs are duplicated, each is an exact copy of the original.
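To make the copying argument concrete, here is a minimal Python simulation under a deliberately simple, hypothetical noise model: every copy picks up as much as ±0.02 of random noise. The analog copy keeps whatever noise it picks up, so errors pile up generation after generation; the digital copy re-decides each value as 0 or 1, so the noise is thrown away at every step.

```python
import random

def analog_copy(signal, noise=0.02, rng=random):
    """Copy a continuous signal; each copy keeps the noise it picks up."""
    return [x + rng.uniform(-noise, noise) for x in signal]

def digital_copy(bits, noise=0.02, rng=random):
    """Copy a bit stream: noise is added in transit, but the receiver
    re-decides every value as 0 or 1, which discards the noise."""
    received = [b + rng.uniform(-noise, noise) for b in bits]
    return [1 if x >= 0.5 else 0 for x in received]

def copy_generations(copy_fn, original, generations, seed=0):
    """Make a copy of a copy of a copy ... `generations` times."""
    rng = random.Random(seed)
    current = list(original)
    for _ in range(generations):
        current = copy_fn(current, rng=rng)
    return current

original_bits = [1, 0, 1, 1, 0, 0, 1, 0]
analog_master = [float(b) for b in original_bits]

analog_tenth = copy_generations(analog_copy, analog_master, 10)
digital_tenth = copy_generations(digital_copy, original_bits, 10)

analog_error = max(abs(a - b) for a, b in zip(analog_tenth, analog_master))
print("analog drift after 10 copies:", round(analog_error, 4))
print("10th digital copy identical:", digital_tenth == original_bits)
```

The 0.5 threshold and the noise level are illustrative choices; as long as the noise added during one copy stays well below the threshold, every digital generation comes out identical to the original.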

So how do you go digital? You simply need to digitize your material. To digitize means to convert data, analog signals, images, and so on into the discrete format (1s and 0s) that can be interpreted by computers. For example, Figure 2-1 shows how music can be digitized. Once digitized, the music recording, data, image, shape, and so on can be manipulated by the computer. For example, old recordings of artists from Enrico Caruso to the Beatles have been digitized and then digitally reconstructed on computers to eliminate unwanted distortion and static. Some of these reconstructed CDs are actually better than the originals!
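Digitizing sound comes down to two steps: measuring (sampling) the analog signal at regular intervals, then rounding each measurement to one of a fixed number of levels (quantizing). A minimal Python sketch of both steps follows. The 440-Hz tone, the 8,000-samples-per-second rate, and the 8-bit sample size are assumptions chosen to keep the output short; audio CDs use 44,100 samples per second at 16 bits per sample.

```python
import math

def digitize(signal, sample_rate, num_samples, bits):
    """Sample a continuous signal at regular intervals and quantize each
    sample to an unsigned integer that fits in `bits` bits."""
    levels = 2 ** bits                      # 8 bits -> 256 possible levels
    samples = []
    for n in range(num_samples):
        t = n / sample_rate                 # time of this sample, in seconds
        value = signal(t)                   # assumed to lie between -1.0 and 1.0
        # Map -1.0..1.0 onto 0..levels-1 and round to the nearest level.
        samples.append(round((value + 1) / 2 * (levels - 1)))
    return samples

def tone(t, frequency=440.0):
    """A pure tone standing in for a piece of recorded music (hypothetical input)."""
    return math.sin(2 * math.pi * frequency * t)

pcm = digitize(tone, sample_rate=8000, num_samples=8, bits=8)
print(pcm)                                  # eight whole numbers between 0 and 255
print([format(s, "08b") for s in pcm[:3]])  # the first three samples as 1s and 0s
```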

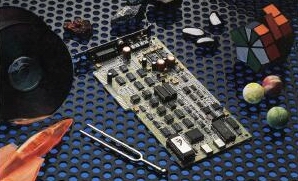

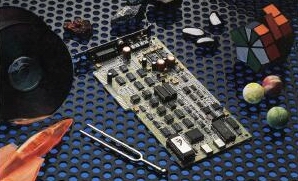

Digital sound information is stored temporarily in the RAM chips on this circuit board (center), which enables stereo sound output from a PC. Sound information can be stored permanently on magnetic disk or optical laser disk (left). ATI

Binary Digits: On-Bits and Off-Bits

It's amazing, but the seemingly endless potential of computers is based on the two digital states: on and off. The electronic nature of the computer makes it possible to combine these two electronic states to represent letters, numbers, colors, sounds, images, shapes, and much more, even odors. An "on" or "off" electronic state is represented by a bit, short for binary digit. In the binary numbering system (base 2), the on-bit is a 1 and the off-bit is a 0.
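Because each position in a base-2 number is worth twice the position to its right, converting between decimal and binary takes only repeated division or multiplication by 2. A short Python sketch (the function names are illustrative):

```python
def to_binary(n):
    """Return the base-2 digits of a non-negative integer as a string."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))   # the remainder is the next bit (1 = on, 0 = off)
        n //= 2
    return "".join(reversed(digits))

def from_binary(bits):
    """Rebuild the decimal value: each position is worth twice the one to its right."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)
    return value

for n in (0, 5, 66, 255):
    print(n, "->", to_binary(n), "->", from_binary(to_binary(n)))
```

Python's built-in bin() performs the same conversion (bin(66) returns '0b1000010'); the hand-written loop simply shows the arithmetic.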

Vacuum tubes, transistors, and integrated circuits characterize the generations of computers. Each of these technologies enables computers to distinguish between on and off and, therefore, to use binary logic. Physically, these states are achieved in a variety of ways.

• In RAM, the two electronic states often are represented by the presence or absence of an electrical charge.
• In disk storage, the two states are made possible by the magnetic arrangement of the surface coating on magnetic tapes and disks.
• In CDs and CD-ROMs, digital data are stored permanently as microscopic pits.
• In fiber optic cable, binary data flow through the cable as pulses of light.

Going Digital with Compact Discs

The recording industry has gone digital. To create a master CD, analog signals are converted to digital signals that can be manipulated by a computer and written to a master CD. The master is duplicated and the copies are sold through retail channels.

Bits may be fine for computers, but human beings are more comfortable with letters and decimal numbers (the base-10 numerals 0 through 9). We like to see colors and hear sounds. Therefore, the letters, decimal numbers, colors, and sounds that we input into a computer system while doing word processing, graphics, and other applications must be translated into 1s and 0s for processing and storage. The computer translates the bits back into letters, decimal numbers, colors, and sounds for output on monitors, printers, speakers, and so on.

Data in a Computer

To manipulate stored data within a computer system, we must have a way of storing and retrieving it. Data are stored temporarily during processing in random-access memory (RAM), which is also referred to as primary storage. Data are stored permanently on secondary storage devices such as magnetic tape and disk drives. In this chapter we discuss primary storage (RAM) in detail, as well as how data are represented electronically in a computer system and how a computer works internally.

Encoding Systems: Bits and Bytes

Computers do not speak to one another in English, Spanish, or French. They have their own languages, which are better suited to electronic communication. In these languages, bits are combined according to an encoding system to represent letters (alpha characters), numbers (numeric characters), and special characters (such as *, $, +, and &), collectively referred to as alphanumeric characters.

ASCII and ANSI

ASCII (American Standard Code for Information Interchange, pronounced "AS-key") is the most popular encoding system for PCs and data communication. In ASCII, alphanumeric characters are encoded into a bit configuration on input so that the computer can interpret them. This coding equates a unique series of on-bits and off-bits with a specific character. Just as the words mother and father are arbitrary English-language character strings that refer to our parents, 1000010 is an arbitrary ASCII code that refers to the letter B. When you tap the letter B on a PC keyboard, the B is transmitted to the processor as a coded string of binary digits (for example, 1000010 in ASCII), as shown in Figure 2-3. The characters are decoded on output so we can interpret them. The combination of bits used to represent a character is called a byte (pronounced "bite").
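The encode-on-input, decode-on-output round trip can be imitated in Python, whose ord() and chr() functions return and accept character codes; for the 128 ASCII characters, those codes are exactly the ASCII values. The function names below are illustrative.

```python
def encode_ascii(text):
    """Encode each character as its 7-bit ASCII code, written as a bit string."""
    codes = []
    for ch in text:
        if ord(ch) > 127:
            raise ValueError(f"{ch!r} is not an ASCII character")
        codes.append(format(ord(ch), "07b"))   # e.g. "B" -> "1000010"
    return codes

def decode_ascii(codes):
    """Turn 7-bit strings back into characters so people can read them."""
    return "".join(chr(int(code, 2)) for code in codes)

codes = encode_ascii("Bit")
print(codes)                 # ['1000010', '1101001', '1110100']
print(decode_ascii(codes))   # Bit
```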

The 7-bit ASCII code can represent up to 128 characters (2⁷). Although the English language has considerably fewer than 128 printable characters, the extra bit configurations are needed to represent additional common and not-so-common special characters (such as - [hyphen], @ [at], | [broken vertical bar], and ~ [tilde]) and to signal a variety of activities to the computer (such as ringing a bell or telling the computer to accept a piece of data).
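Counting the 128 ASCII codes bears this out: codes 0 through 31 and code 127 are control codes, which signal actions (code 7, for example, rings the bell), while codes 32 through 126 are printable characters, including the space. A quick Python check:

```python
# Split the 128 ASCII codes (0 through 127) into control codes and printable characters.
control = [code for code in range(128) if code < 32 or code == 127]
printable = [code for code in range(128) if 32 <= code <= 126]

print(len(control), "control codes")                            # 33
print(len(printable), "printable characters (with the space)")  # 95
print(repr(chr(7)))                  # code 7 is BEL, the "ring the bell" signal
print("".join(chr(c) for c in (45, 64, 124, 126)))              # -@|~
```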

ASCII is a 7-bit code, but the PC byte is 8 bits. There are 256 (2⁸) possible bit configurations in an 8-bit byte. Hardware and software vendors accept the 128 standard ASCII codes and use the extra 128 bit configurations to represent control characters or noncharacter images to complement their hardware or software products. For example, the IBM-PC version of extended ASCII contains the characters of many foreign languages (such as ä [a with umlaut] and é [e with acute accent]) and a wide variety of graphic images that can be combined on a text screen to produce larger images (for example, the box around a window on a display screen).

Microsoft Windows uses the 8-bit ANSI encoding system (named for the American National Standards Institute) to enable the sharing of text between Windows applications. As in IBM extended ASCII, the first 128 ANSI codes are the same as the ASCII codes, but the next 128 are defined to meet the specific needs of Windows applications.
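Python includes codecs for both 8-bit families described above: "cp437" for the original IBM PC character set and "cp1252" for the Windows code page usually called "ANSI." The sketch below checks that the lower 128 codes agree with ASCII, then decodes a few upper-half bytes both ways, printing Unicode character names so the output stays plain ASCII.

```python
import unicodedata

# The lower 128 codes (0-127) match ASCII in both code pages.
lower_half_matches = all(
    bytes([b]).decode("cp437") == bytes([b]).decode("cp1252") == chr(b)
    for b in range(128)
)
print("codes 0-127 identical to ASCII:", lower_half_matches)

# The upper 128 codes are vendor-defined, so one byte can mean different things.
for b in (0x82, 0x84, 0xC9):
    raw = bytes([b])
    print(hex(b))
    print("  IBM PC (cp437):  ", unicodedata.name(raw.decode("cp437")))
    print("  Windows (cp1252):", unicodedata.name(raw.decode("cp1252")))
```

In the IBM set, byte 0xC9 is one of the box-drawing pieces used to outline windows on a text screen; in the Windows set, the same byte is a capital É.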

Unicode: 65,536 Possibilities

A consortium of heavyweight computer industry companies, including IBM, Microsoft, and Sun Microsystems, is sponsoring the development of Unicode, a uniform 16-bit encoding system. Unicode will enable computers and applications to talk to one another more easily and will accommodate most languages of the world (including Hebrew, Japanese, and upper- and lowercase Greek). ASCII, with 128 (2⁷) character codes, is sufficient for the English language but falls far short of the Japanese language requirements. Unicode's 16-bit code allows for 65,536 characters (2¹⁶). The consortium is proposing that Unicode be adopted as a standard for information interchange throughout the global computer community. Universal acceptance of the Unicode standard would help facilitate international communication in all areas, from monetary transfers between banks to e-mail. With Unicode as a standard, software could be created more easily to work with a wider base of languages.
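The arithmetic behind these code sizes, and the memory cost of a 16-bit code, can be checked in Python. Its "utf-16-le" codec (16-bit units, little-endian, without the 2-byte byte-order mark that the plain "utf-16" codec adds) stands in here for the 16-bit Unicode encoding described in this section, and the kanji U+65E5 is an arbitrary sample Japanese character.

```python
# Distinct codes available at each code width discussed in this section.
for bits in (7, 8, 16):
    print(f"{bits}-bit code: {2 ** bits:,} possible characters")

# The same letter A in an 8-bit code and in a 16-bit code.
ascii_a = "A".encode("ascii")
unicode_a = "A".encode("utf-16-le")
print("ASCII A:", ascii_a.hex(), len(ascii_a), "byte")         # 41 1 byte
print("16-bit A:", unicode_a.hex(), len(unicode_a), "bytes")   # 4100 2 bytes

# A Japanese character has no ASCII code at all, but fits in 16 bits.
kanji = "\u65e5"
try:
    kanji.encode("ascii")
except UnicodeEncodeError:
    print("no ASCII code for U+65E5")
print("16-bit code:", kanji.encode("utf-16-le").hex())         # e565
```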

Unicode, like any advancement in computer technology, presents conversion problems. The 16-bit Unicode demands more memory than do traditional 8-bit codes: an A in Unicode takes twice the RAM and disk space of an ASCII A. Currently, 8-bit encoding systems provide the foundation for most software packages and existing databases. Should Unicode be adopted as a standard, programs would have to be revised to work with Unicode, and existing data would need to be converted to Unicode. Information processing needs have simply outgrown the 8-bit standard, so conversion to Unicode or some other standard is inevitable. This conversion, however, will be time-consuming and expensive.

Creating text for a video display calls for a technology called "character generation." An 8-bit encoding system, with its 256 unique bit configurations, is more than adequate to represent all of the alphanumeric characters used in the English language. The Chinese, however, need a 16-bit encoding system, like Unicode, to represent their 13,000 characters.

Analyzing a Computer System, Bit by Bit


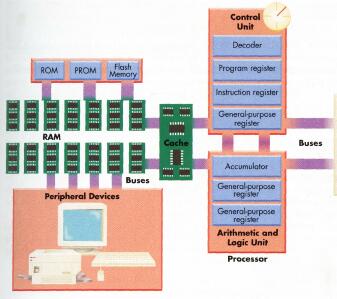

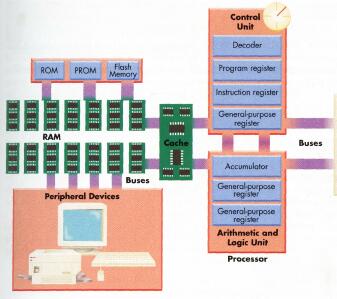

The processor runs the show and is the nucleus of any computer system. Regardless of the complexity of the hundreds of different computers sold by various manufacturers, each processor, sometimes called the central processing unit or CPU, has only two fundamental sections: the control unit and the arithmetic and logic unit. These units work together with random-access memory (RAM) to make the processor, and the computer, go. Let's look at their functions and the relationships among them.

RAM: Random-Access Storage

RAM TECHNOLOGY: SDRAM AND RDRAM

RAM is read-and-write memory: data can be both read from it and written to it. RAM (primary storage) is electronic circuitry with no moving parts. Electrically charged points in the RAM chips represent the bits (1s and 0s) that make up the data and other information stored in RAM. With no mechanical movement, data can be accessed from RAM at electronic speeds. Over the past two decades, researchers have given us a succession of RAM technologies, each designed to keep pace with ever-faster processors. Existing PCs have FPM RAM, EDO RAM, SRAM, DRAM, BEDO RAM, and other types of memory. However, most new PCs are being equipped with synchronous dynamic RAM (SDRAM). SDRAM is able to synchronize itself with the processor, enabling data transfer at more than twice the speed of previous RAM technologies. With the next generation of processors, we'll probably move to Rambus DRAM (RDRAM), which is six times faster than SDRAM.

A state-of-the-art SDRAM memory chip, smaller than a postage stamp, can store about 128,000,000 bits, or about 16,000,000 characters of data at 8 bits per character! Physically, memory chips are installed on single in-line memory modules, or SIMMs, and on the newer dual in-line memory modules, or DIMMs. SIMMs are less expensive but have only a 32-bit data path to the processor, whereas DIMMs have a 64-bit data path.

There is one major problem with RAM storage: It is volatile memory. That is, when the electrical current is turned off or interrupted, the data are lost. Because RAM is volatile, it provides the processor only with temporary storage for programs and data. Several nonvolatile memory technologies have emerged, but none has exhibited the qualities necessary for widespread use as primary storage (RAM). Although much slower than RAM, nonvolatile memory is superior to SDRAM for use in certain computers because it is highly reliable, it is not susceptible to environmental fluctuations, and it can operate on battery power for a considerable length of time. For example, it is well suited for use with industrial robots.

READIN', 'RITIN', AND RAM CRAM

All programs and data must be transferred to RAM from an input device (such as a keyboard) or from secondary storage (such as a disk) before programs can be executed and data can be processed. Therefore, RAM space is always at a premium. Once a program is no longer in use, the storage space it occupied is assigned to another program awaiting execution. PC users attempting to run too many programs at the same time face RAM cram, a situation in which there is not enough memory to run the programs. Programs and data are loaded to RAM from secondary storage because the time required to access a program instruction or a piece of data from RAM is significantly less than from secondary storage. Thousands of instructions or pieces of data can be accessed from RAM in the time it would take to access a single piece of data from disk storage. RAM is essentially a high-speed holding area for data and programs. In fact, nothing really happens in a computer system until the program instructions and data are moved from RAM to the processor.

Interaction between Computer System Components

During processing, instructions and data are passed between the various types of internal memories, the processor's control unit and arithmetic and logic unit, the coprocessor, and the peripheral devices over the common electrical bus. A system clock paces the speed of operation within the processor and ensures that everything takes place in timed intervals. Refer back to this figure as you read about these components throughout the remainder of this chapter.

All input/output (I/O) is "read to" or "written from" RAM. Programs and data must be "loaded," or moved, to RAM from secondary storage for processing. This is a nondestructive read process; that is, the program and data that are read reside in both RAM (temporarily) and secondary storage (permanently).

The data in RAM are manipulated by the processor according to program instructions. A program instruction or a piece of data is stored in a specific RAM location called an address. RAM is analogous to the rows of boxes you see in post offices. Just as each P.O. box has a number, each byte in RAM has an address. Addresses permit program instructions and data to be located, accessed, and processed. The content of each address changes frequently as different programs are executed and new data are processed.
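The post-office analogy translates directly into code. In this minimal sketch, a hypothetical ToyRAM class wraps a Python bytearray: the index is the address, each cell holds one byte, and loading a "file" copies its bytes into RAM without changing the original (the nondestructive read described above). The class and its method names are illustrative, not a real API.

```python
class ToyRAM:
    """Hypothetical byte-addressable RAM: each address holds one byte,
    like a numbered P.O. box holding one item."""

    def __init__(self, size):
        self.cells = bytearray(size)        # every byte starts out as 0

    def read(self, address):
        return self.cells[address]

    def write(self, address, value):
        self.cells[address] = value         # bytearray rejects values outside 0-255

    def load(self, start, data):
        """Copy bytes from secondary storage into RAM starting at `start`.
        The source is only read, never changed (a nondestructive read)."""
        for offset, byte in enumerate(data):
            self.write(start + offset, byte)


disk_file = b"HI"                           # stand-in for a file on disk
ram = ToyRAM(size=16)
ram.load(start=4, data=disk_file)

print([ram.read(addr) for addr in range(3, 7)])   # [0, 72, 73, 0]
ram.write(5, ord("A"))                      # the content of address 5 changes
print(bytes(ram.cells[4:6]), disk_file)     # b'HA' b'HI'
```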

CACHE MEMORY

To facilitate an even faster transfer of instructions and data to the processor, computers are designed with cache memory (see Figure 2-5). Cache memory is used by computer designers to increase computer system throughput. Throughput refers to the rate at which work can be performed by a computer system. Like RAM, cache is a high-speed holding area for program instructions and data. However, cache memory uses internal storage technologies, such as static RAM (SRAM), that are much faster (and much more expensive) than conventional RAM. With only a fraction of the capacity of RAM, cache memory holds only those instructions and data that are likely to be needed next by the processor. Cache memory is effective because, in a typical session, the same data or instructions are accessed over and over. The processor first checks cache memory for needed data and instructions, thereby reducing the number of accesses to the slower SDRAM.
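The payoff from repeated accesses can be shown with a small simulation. The hypothetical ToyCache below sits in front of a slower "main memory" list and, when full, discards the least recently used entry. That LRU replacement policy is one common choice, assumed here for simplicity rather than taken from the text.

```python
from collections import OrderedDict

class ToyCache:
    """Hypothetical cache in front of slower main memory. It keeps only the
    most recently used addresses and evicts the least recently used one
    when it runs out of room (an LRU policy)."""

    def __init__(self, capacity, main_memory):
        self.capacity = capacity
        self.main_memory = main_memory      # the slower, larger store
        self.lines = OrderedDict()          # address -> value, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:           # check the cache first
            self.hits += 1
            self.lines.move_to_end(address) # mark as most recently used
            return self.lines[address]
        self.misses += 1                    # not cached: go to main memory
        value = self.main_memory[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry
        return value

main_memory = list(range(1000))
cache = ToyCache(capacity=4, main_memory=main_memory)

# A loop that touches the same few addresses over and over.
for _ in range(10):
    for address in (100, 101, 102):
        cache.read(address)

print("hits:", cache.hits, "misses:", cache.misses)   # hits: 27 misses: 3
```

Because the loop keeps returning to the same three addresses, only the first pass has to go to main memory; every later access is served from the cache.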

ROM, PROM, AND FLASH MEMORY

A special type of internal memory, called read-only memory (ROM), cannot be altered by the user (see Figure 2-5). The contents of ROM (rhymes with "mom"), a nonvolatile technology, are "hard-wired" (designed into the logic of the memory chip) by the manufacturer and can be "read only." When you turn on a microcomputer system, a program in ROM automatically readies the computer system for use and produces the initial display-screen prompt.

A variation of ROM is programmable read-only memory (PROM). PROM is ROM into which you, the user, can load read-only programs and data. Generally, once a program is loaded to PROM, it is seldom, if ever, changed. ROM and PROM are used in a variety of capacities within a computer system.

Flash memory is a type of PROM that can be altered easily by the user. Flash memory is a feature of many new processors, I/O devices, and storage devices. The logic capabilities of these devices can be upgraded by simply downloading new software from a vendor-supplied disk to flash memory. Upgrades to early processors and peripheral devices required the user to replace the old circuit board or chip with a new one. The emergence of flash memory has eliminated this time-consuming and costly method of upgrade. Look for nonvolatile flash memory to play an increasing role in computer technology as continuing improvements close the gap between its speed and flexibility and those of CMOS RAM.

The Processor: Nerve Center

THE CONTROL UNIT

Just as the processor is the nucleus of a computer system, the control unit is the nucleus of the processor. It has three primary functions:

1. To read and interpret program instructions
2. To direct the operation of internal processor components
3. To control the flow of programs and data in and out of RAM

During program execution, the first in a sequence of program instructions is moved from RAM to the control unit, where it is decoded and interpreted by the decoder. The control unit then directs other processor components to carry out the operations necessary to execute the instruction.

The control unit contains high-speed working storage areas called registers that can store no more than a few bytes (see Figure 2-5). Registers handle instructions and data at a speed about 10 times faster than that of cache memory and are used for a variety of processing functions. One register, called the instruction register, contains the instruction being executed. Other general-purpose registers store data needed for immediate processing. Registers also store status information. For example, the program register (often called the program counter) contains the RAM address of the next instruction to be executed. Registers facilitate the movement of data and instructions between RAM, the control unit, and the arithmetic and logic unit.

THE ARITHMETIC AND LOGIC UNIT

The arithmetic and logic unit performs all computations (addition, subtraction, multiplication, and division) and all logic operations (comparisons). The results are placed in a register called the accumulator. Examples of computations include the payroll deduction for social security, the day-end inventory, and the balance on a bank statement. A logic operation compares two pieces of data, either alphabetic or numeric. Based on the result of the comparison, the program "branches" to one of several alternative sets of program instructions. For example, in an inventory system each item in stock is compared to a reorder point at the end of each day. If the inventory level falls below the reorder point, a sequence of program instructions is executed that produces a purchase order.
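The end-of-day inventory check is a compact example of a logic operation followed by a branch. In this sketch, the StockItem record and its reorder_quantity field are hypothetical; the comparison against the reorder point is what decides which instructions run.

```python
from dataclasses import dataclass

@dataclass
class StockItem:
    name: str
    on_hand: int
    reorder_point: int
    reorder_quantity: int     # hypothetical field: how many units to order

def end_of_day_run(items):
    """Compare each item's inventory level with its reorder point and branch
    to the purchase-order instructions only when the level falls below it."""
    purchase_orders = []
    for item in items:
        if item.on_hand < item.reorder_point:     # the logic operation (comparison)
            purchase_orders.append((item.name, item.reorder_quantity))
        # otherwise the program branches past the purchase-order step
    return purchase_orders

inventory = [
    StockItem("widgets", on_hand=40, reorder_point=50, reorder_quantity=200),
    StockItem("gears", on_hand=75, reorder_point=50, reorder_quantity=100),
]
print(end_of_day_run(inventory))   # [('widgets', 200)]
```

An item sitting exactly at its reorder point produces no order, since the text's rule is to reorder only when the level falls below that point.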

Buses: The Processor's Mass Transit System

Just as big cities have mass transit systems that move large numbers of people, the computer has a similar system that moves millions of bits a second. Both transit systems use buses, although the one in the computer doesn't have wheels. All electrical signals travel on a common electrical bus. The term bus was derived from its wheeled cousin because passengers on both buses (people and bits) can get off at any stop. In a computer, the bus stops are the control unit, the arithmetic and logic unit, internal memory (RAM, ROM, flash, and other types of internal memory), and the device controllers (small computers) that control the operation of the peripheral devices (see Figure 2-5).

The bus is the common pathway through which the processor sends data and commands to, and receives them from, primary and secondary storage and all I/O peripheral devices. Bits traveling between RAM, cache memory, and the processor hop on the address bus and the data bus. Source and destination addresses are sent over the address bus to identify a particular location in memory; then the data and instructions are transferred over the data bus to or from that location.

Making the Processor Work

We communicate with computers by telling them what to do in their native tongue: the machine language.

THE MACHINE LANGUAGE

You may have heard of computer programming languages such as Visual Basic and C++. There are dozens of these languages in common usage, all of which need to be translated into the only language that a computer understands: its own machine language. Can you guess how machine-language instructions are represented inside the computer? You are correct if you answered "as strings of binary digits."

THE MACHINE CYCLE: MAKING THE ROUNDS

Every computer has a machine cycle. The speed of a processor is sometimes measured by how long it takes to complete a machine cycle. The timed interval that makes up the machine cycle is the total of the instruction time, or I-time, and the execution time, or E-time (see Figure 2-6). The following actions take place during the machine cycle:

Instruction time

• Fetch instruction. The next machine-language instruction to be executed is retrieved, or "fetched," from RAM or cache memory and loaded to the instruction register in the control unit.
• Decode instruction. The instruction is decoded and interpreted.

Execution time

• Execute instruction. Using whatever processor resources are needed (primarily the arithmetic and logic unit), the instruction is executed.
• Place result in memory. The results are placed in the appropriate memory position or the accumulator.
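To see the four steps of the machine cycle working together, here is a toy processor in Python. Its 8-bit instruction format (a 3-bit operation code followed by a 5-bit RAM address) and its four operations are invented for this illustration and do not correspond to any real processor's machine language; the variables mirror the program register, instruction register, and accumulator described earlier.

```python
# Hypothetical 8-bit instruction format for this sketch (not a real processor):
#   top 3 bits = operation code, low 5 bits = RAM address (0-31)
OPCODES = {0b000: "HALT", 0b001: "LOAD", 0b010: "ADD", 0b011: "STORE"}

def run(ram):
    program_register = 0          # RAM address of the next instruction
    accumulator = 0
    while True:
        # ---- I-time ----
        instruction_register = ram[program_register]      # fetch
        program_register += 1
        opcode = OPCODES[instruction_register >> 5]       # decode (KeyError if unknown)
        address = instruction_register & 0b11111
        # ---- E-time ----
        if opcode == "HALT":
            return accumulator
        if opcode == "LOAD":                              # execute; result to accumulator
            accumulator = ram[address]
        elif opcode == "ADD":
            accumulator = (accumulator + ram[address]) % 256   # keep it to 8 bits
        elif opcode == "STORE":
            ram[address] = accumulator                    # place result in memory

ram = [0] * 32
program = ["00110100",   # LOAD  20
           "01010101",   # ADD   21
           "01110110",   # STORE 22
           "00000000"]   # HALT
for addr, bits in enumerate(program):
    ram[addr] = int(bits, 2)    # machine language is just strings of bits
ram[20], ram[21] = 7, 5         # the data

print(run(ram), ram[22])        # 12 12
```

Each trip around the while loop is one machine cycle: the fetch and decode lines make up the I-time, and the execute and store lines make up the E-time.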

Processor Design: There Is a Choice

CISC AND RISC: MORE IS NOT ALWAYS BETTER

Most processors in mainframe computers and personal computers have a CISC (complex instruction set computer) design. A CISC computer's machine language offers programmers a wide variety of instructions from which to choose (add, multiply, compare, move data, and so on). CISC computers reflect the evolution of increasingly sophisticated machine languages. Computer designers, however, are rediscovering the beauty of simplicity. Computers designed around much smaller instruction sets can realize significantly increased throughput for certain applications, especially those that involve graphics (for example, computer-aided design). These computers have RISC (reduced instruction set computer) design. The RISC processor shifts much of the computational burden from the hardware to the software. Proponents of RISC design feel that the limitations of a reduced instruction set are easily offset by increased processing speed and the lower cost of RISC microprocessors.

PARALLEL PROCESSING: COMPUTERS WORKING TOGETHER

In a single-processor environment, the processor addresses the programming problem sequentially, from beginning to end. Today, designers are building computers that break a programming problem into pieces. Work on each of these pieces is then executed simultaneously in separate processors, all of which are part of the same computer. The concept of using multiple processors in the same computer is known as parallel processing. In parallel processing, one main processor examines the programming problem and determines what portions, if any, of the problem can be solved in pieces (see Figure 2-7). Those pieces that can be addressed separately are routed to other processors and solved. The individual pieces are then reassembled in the main processor for further computation, output, or storage. The net result of parallel processing is better throughput.
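The split, solve, and reassemble pattern can be sketched with Python's standard concurrent.futures module. A thread pool stands in for the separate processors; note that in CPython, threads running pure-Python arithmetic do not actually compute simultaneously, so for real speedups on CPU-bound work you would swap in ProcessPoolExecutor, which offers the same map() interface. The sum-of-squares problem is only a stand-in.

```python
from concurrent.futures import ThreadPoolExecutor

def solve_piece(piece):
    """Solve one independent piece of the problem: here, a partial sum of squares."""
    return sum(x * x for x in piece)

def parallel_sum_of_squares(numbers, workers=4):
    if not numbers:
        return 0
    # The "main processor" splits the problem into independent pieces ...
    size = -(-len(numbers) // workers)               # ceiling division
    pieces = [numbers[i:i + size] for i in range(0, len(numbers), size)]
    # ... routes the pieces to workers that solve them concurrently ...
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = list(pool.map(solve_piece, pieces))
    # ... and reassembles the partial results into the final answer.
    return sum(partial_results)

numbers = list(range(1, 101))
print(parallel_sum_of_squares(numbers))   # 338350
print(sum(x * x for x in numbers))        # 338350, the same answer computed sequentially
```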

Computer designers are creating mainframes and supercomputers with thousands of integrated microprocessors. Parallel processing on such a large scale is referred to as massively parallel processing (MPP). These super-fast supercomputers have sufficient computing capacity to attack applications that have been beyond the reach of computers with traditional designs. For example, researchers can now simulate global warming with these computers.

Describing the Processor: Distinguishing Characteristics

How do we distinguish one computer from another? Much the same way we'd distinguish one person from another. When describing someone, we generally note gender, height, weight, and age. When describing computers or processors, we talk about word size, speed, and the capacity of their associated RAM (see Figure 2-8). For example, a computer might be described as a 64-bit, 450-MHz, 256-MB PC. Let's see what this means.

Word Size: 16-, 32-, and 64-Lane Bitways

Just as the brain sends and receives signals through the central nervous system, the processor sends and receives electrical signals through its common electrical bus a word at a time. A word describes the number of bits that are handled as a unit within a particular computer system's bus or during internal processing.

Twenty years ago, a computer's word size applied both to transmissions through the electrical bus and to all internal processing. This is no longer the case. In some of today's computers, one word size defines pathways in the bus and another word size defines internal processing capacity. Internal processing involves the movement of data and commands between registers, the control unit, and the arithmetic and logic unit (see Figure 2-5). Many popular computers have 64-bit internal processing but only a 32-bit path through the bus. For certain input/output-oriented applications, a 64-bit computer with a 32-bit bus may not realize the throughput of a full 64-bit computer.
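A simplified count shows why the narrower bus matters for input/output-heavy work. The sketch assumes each bus transfer moves exactly one bus-width of bits and ignores clock speed and every other overhead; the function name and the 1,000-word job are hypothetical.

```python
def bus_transfers(total_bits, bus_width):
    """Trips over the bus needed to move total_bits when each trip carries
    bus_width bits (rounded up, since a partial trip still counts)."""
    return -(-total_bits // bus_width)

job_bits = 1_000 * 64          # hypothetical job: move 1,000 64-bit words
for bus_width in (64, 32):
    print(f"{bus_width}-bit bus: {bus_transfers(job_bits, bus_width):,} transfers")
```

Under these assumptions the 32-bit bus needs twice as many transfers (2,000 versus 1,000) to move the same 64-bit words.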

The word size of modern microcomputers is normally 64 bits (eight 8-bit bytes). Early PCs had word sizes of 8 bits (one byte) and 16 bits (two bytes). Workstations, mainframes, and supercomputers have word sizes of 64 bits and up.

Processor Speed: Warp Speed

A tractor can go 12 miles per hour (mph), a minivan can go 90 mph, and a slingshot drag racer can go 240 mph. These speeds, however, provide little insight into the relative capabilities of these vehicles. What good is a 240-mph tractor or a 12-mph minivan? Similarly, you have to place the speed of computers within the context of their design and application. Generally, PCs are measured in MHz, workstations and mainframes are measured in MIPS, and supercomputers are measured in FLOPS.

MEGAHERTZ: MHz

The PC's heart is its crystal oscillator and its heartbeat is the clock cycle. The crystal oscillator paces the execution of instructions within the processor. A micro's processor speed is rated by its frequency of oscillation, or the number of clock cycles per second. Most modern personal computers are rated between 300 and 600 megahertz, or MHz (millions of clock cycles per second). The elapsed time for one clock cycle is 1/frequency (1 divided by the frequency). For example, the time it takes to complete one cycle on a 400-MHz processor is 1/400,000,000, or 0.0000000025 seconds, or 2.5 nanoseconds (2.5 billionths of a second). Normally several clock cycles are required to fetch, decode, and execute a single program instruction. The shorter the clock cycle, the faster the processor.

Many field sales representatives carry notebook PCs when they call on customers. This Merck Human Health-U.S. representative uses computer-based detailing to make a presentation on a cholesterol reducer to a cardiologist. Computer data help her better target information to physicians. Her notebook has a 64-bit processor with a speed of 300 MHz and a RAM capacity of 128 MB.

The CRAY T90 supercomputer is the most powerful general-purpose computer ever built. General-purpose computers are capable of handling a wide range of applications. The system is capable of crunching data at a peak rate of 64 GFLOPS (gigaflops). One GFLOPS equals one billion floating point operations per second. Courtesy of E-Systems, Inc.

To properly evaluate the processing capability of a computer, you must consider both the processor speed and the word size. A 64-bit computer with a 450-MHz processor has more processing capability than does a 32-bit computer with a 450-MHz processor.
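The 1/frequency rule from the megahertz discussion is easy to tabulate. This Python sketch converts a clock rating in MHz to the length of one clock cycle in nanoseconds; the list of ratings is illustrative.

```python
def cycle_time_ns(mhz):
    """Length of one clock cycle in nanoseconds (1 / frequency)."""
    hertz = mhz * 1_000_000          # MHz -> cycles per second
    return 1e9 / hertz               # (1 / hertz) seconds, expressed in nanoseconds

for mhz in (300, 400, 450, 600):
    print(f"{mhz} MHz: {cycle_time_ns(mhz):.2f} ns per cycle")
```

For the 400-MHz example in the text, the function returns 2.5 nanoseconds.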

MIPS

Processing speed may also be measured in MIPS, or millions of instructions per second. Although frequently associated with workstations and mainframes, MIPS is also applied to high-end PCs. Computers operate in the 20- to 1000-MIPS range. A 100-MIPS computer can execute 100 million instructions per second.
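Clock speed and MIPS are connected through the number of clock cycles each instruction needs. The sketch below makes the simplifying assumption that every instruction takes the same hypothetical average number of cycles; real processors vary from instruction to instruction and from design to design, which is one reason MIPS figures from different computers are hard to compare directly.

```python
def mips(clock_mhz, cycles_per_instruction):
    """Rough MIPS estimate: millions of clock cycles per second divided by
    the average number of cycles each instruction needs."""
    return clock_mhz / cycles_per_instruction

def seconds_to_run(instructions, mips_rating):
    """Time to execute a number of instructions at a given MIPS rating."""
    return instructions / (mips_rating * 1_000_000)

rating = mips(clock_mhz=400, cycles_per_instruction=4)   # hypothetical average
print(rating, "MIPS")                                     # 100.0 MIPS
print(seconds_to_run(100_000_000, rating), "seconds")     # 1.0 seconds
```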

FLOPS

Supercomputer speed is measured in FLOPS, or floating point operations per second. Supercomputer applications, which are often scientific, frequently involve floating point operations. Floating point operations accommodate very small or very large numbers. State-of-the-art supercomputers operate in the 500-GFLOPS to 3-TFLOPS range. (A GFLOPS, or gigaflops, is one billion FLOPS; a TFLOPS, or teraflops, is one trillion FLOPS.)

RAM Capacity: Megachips

The capacity of RAM is stated in terms of the number of bytes it can store. Memory capacity for most computers is stated in terms of megabytes (MB). One megabyte equals 1,048,576 (2²⁰) bytes. Memory capacities of modern PCs range from 32 MB to 512 MB. Memory capacities of early PCs were measured in kilobytes (KB). One kilobyte is 1024 (2¹⁰) bytes of storage.

Some high-end mainframes and supercomputers have more than 8000 MB of RAM. Their RAM capacities are stated as gigabytes (GB), about one billion bytes. It's only a matter of time before we state RAM in terms of terabytes (TB), about one trillion bytes. GB and TB are frequently used in reference to high-capacity secondary storage. Occasionally you will see memory capacities of individual chips stated in terms of kilobits (Kb) and megabits (Mb). Figure 2-9 should give you a feel for KBs, MBs, GBs, and TBs.
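The powers of 2 behind these units, and the memory-chip arithmetic from the RAM discussion, can be worked out in a few lines of Python. The sketch assumes GB and TB continue the same pattern as KB and MB (2³⁰ and 2⁴⁰ bytes), which is why the text describes them as "about" one billion and one trillion bytes.

```python
UNITS = {"KB": 2 ** 10, "MB": 2 ** 20, "GB": 2 ** 30, "TB": 2 ** 40}

for name, size in UNITS.items():
    print(f"1 {name} = {size:,} bytes")

# 8000 MB of mainframe RAM expressed in GB
print(round(8000 * UNITS["MB"] / UNITS["GB"], 2), "GB")   # 7.81 GB

# A chip holding about 128,000,000 bits, at 8 bits per character:
print(f"{128_000_000 // 8:,} characters")                 # 16,000,000 characters
```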
